Compatibility

As of version 2.0, the minimum version of Gradle supported by Grolifant is 4.3.

We dropped the compatibility package for Gradle 3.x.

We also moved most of the APIs in the org.ysb33r.grolifant.api.v4 package to org.ysb33r.grolifant.core and deprecated most of the packages, classes and interfaces in org.ysb33r.grolifant.api.v4.

Each package that refers to a major Gradle version now contains only minimal APIs, which are used to load the appropriate compatibility layer for that specific major Gradle version.

For instance, if a class is in the v4 package, it will be compatible with Gradle 4.0+.

If it appears in the v5 package, then it will only be compatible with Gradle 5.0+. The same applies to v6, v7, etc.

Any class which appears in core is not dependent on specific Gradle versions.

In this version, each artifact has been built against a specific version from the Gradle major version it is meant to support.

As per usual you should not rely on anything that appears in the org.ysb33r.grolifant.internal package.

If you need anything from there please raise an issue.

Legacy V4 APIs are now available in the grolifant40-legacy-api artifact.

For the lifetime of 2.x, this artifact will be a transitive dependency, but from 3.0 it will no longer be pulled in transitively and will have to be included explicitly.

In Grolifant 4.0 we plan to drop support for Gradle 4.x completely.


Configuration Cache

The 1.0.x series is the first to be aware of the restrictions introduced by configuration caching from Gradle 6.6 onwards. It introduces GrolifantExtension to help.

Bootstrapping

The library is available on Maven Central. Add the following to your Gradle build script to use it.

repositories {
    mavenCentral()
}

As of Grolifant 2.0, there is no longer a need to specify a specific Gradle-compatibility artifact.

Just specify the grolifant-herd dependency and it will automatically herd together all of the necessary grolifants for the best Gradle compatibility.


dependencies {
    implementation 'org.ysb33r.gradle:grolifant-herd:4.0.0'
}

Utilities for Common Types

String & URI Utilities

Converting objects to strings

Use the stringize (preferable), v4.stringize or v5.stringize methods to convert nearly anything to a string or a collection of strings.

Closures are evaluated and the results are then converted to strings.

Anything that implements Callable, Provider or Supplier will also be converted.

In the case of these three, the value is first extracted and then converted.
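The conversion rules above can be illustrated with a minimal Java sketch. It is not Grolifant's implementation: StringizeSketch is a hypothetical name, Groovy Closures are covered by the Callable branch (a Closure implements Callable), and Gradle's Provider is left out so that the sketch runs without Gradle on the classpath.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.function.Supplier;

// Hypothetical illustration of stringize-style conversion; not Grolifant's code.
final class StringizeSketch {
    private StringizeSketch() {}

    // Unwraps lazy values (Callable, Supplier) until a plain value remains,
    // then converts that value with toString().
    static String stringize(Object item) {
        Object value = unwrap(item);
        if (value == null) {
            throw new IllegalArgumentException("Cannot convert null to a string");
        }
        return value.toString();
    }

    // Converts every element of a collection, evaluating lazy elements first.
    static List<String> stringize(Iterable<?> items) {
        List<String> result = new ArrayList<>();
        for (Object item : items) {
            result.add(stringize(item));
        }
        return result;
    }

    private static Object unwrap(Object item) {
        Object current = item;
        while (current instanceof Callable || current instanceof Supplier) {
            if (current instanceof Callable) {
                try {
                    current = ((Callable<?>) current).call();
                } catch (Exception e) {
                    throw new IllegalStateException("Failed to evaluate lazy value", e);
                }
            } else {
                current = ((Supplier<?>) current).get();
            }
        }
        return current;
    }
}
```

Nested lazy values are unwrapped in a loop, so a Supplier that returns a Callable is still reduced to its final value before toString() is applied.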

Updating Property<String> instances in-situ

Gradle’s Property class is a double-edged sword. On the one side it makes lazy evaluation easier for both the Groovy & Kotlin DSLs, but on the other side it really messes up the Groovy DSL for build script authors.

The correct way to use it is not as a field, but as the return type of the getter, as illustrated by the following skeleton code:

class MyTask extends DefaultTask {

    @Input
    Property<String> getSampleText() {
        this.sampleText
    }

    void setSampleText(Object txt) {
        // ...
    }

    private final Property<String> sampleText
}

The hard part for the plugin author is to deal with initialisation of the private field and then with further updates. This is where updatePropertyString becomes very useful, as the code can now be implemented as follows:

class MyTask extends DefaultTask {

    MyTask() {
        sampleText = project.objects.property(String)
        sampleText.set('default value')
    }

    @Input
    Property<String> getSampleText() {
        this.sampleText
    }

    void setSampleText(Object txt) {
        StringUtils.updatePropertyString(project, sampleText, txt) (1)
    }

    private final Property<String> sampleText
}

| 1 | Updates the value of the Property instance, but keeps the txt object lazy-evaluated. |

Converting objects to URIs

Use the urize method to convert nearly anything to a URI.

Path, File and URL will be correctly evaluated.

Any other object that is convertible to a string is effectively converted by calling toString().toURI().

Closures, Providers, Suppliers, and Callables will be evaluated and the results are then converted to URIs.
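A minimal Java sketch of these conversion rules follows. UrizeSketch is a hypothetical name, the URI pass-through branch is an assumption of the sketch, and Gradle's Provider is represented by Supplier so that it runs without Gradle; it is not Grolifant's urize.

```java
import java.io.File;
import java.net.URI;
import java.net.URISyntaxException;
import java.net.URL;
import java.nio.file.Path;
import java.util.concurrent.Callable;
import java.util.function.Supplier;

// Hypothetical sketch of urize-style conversion rules; not Grolifant's code.
final class UrizeSketch {
    private UrizeSketch() {}

    static URI urize(Object item) {
        Object value = item;
        // Evaluate lazy wrappers first (Groovy Closures implement Callable).
        while (value instanceof Callable || value instanceof Supplier) {
            try {
                value = value instanceof Callable
                        ? ((Callable<?>) value).call()
                        : ((Supplier<?>) value).get();
            } catch (Exception e) {
                throw new IllegalStateException("Failed to evaluate lazy value", e);
            }
        }
        if (value == null) {
            throw new IllegalArgumentException("Cannot convert null to a URI");
        }
        if (value instanceof URI) {
            return (URI) value;
        }
        if (value instanceof Path) {
            return ((Path) value).toUri();
        }
        if (value instanceof File) {
            return ((File) value).toURI();
        }
        if (value instanceof URL) {
            try {
                return ((URL) value).toURI();
            } catch (URISyntaxException e) {
                throw new IllegalArgumentException("Not a valid URI: " + value, e);
            }
        }
        // Anything else: fall back to its string form.
        return URI.create(value.toString());
    }
}
```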

Removing credentials from URIs

Use safeURI to remove credentials from URIs. This is especially useful for printing.
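The idea behind removing credentials can be sketched in Java by rebuilding the URI without its user-info component. SafeUriSketch is a hypothetical helper, not Grolifant's safeURI; note that its error message deliberately avoids echoing the original URI, since that would leak the credentials it is meant to hide.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical sketch of stripping credentials (user-info) from a URI.
final class SafeUriSketch {
    private SafeUriSketch() {}

    static URI withoutCredentials(URI uri) {
        if (uri.getRawUserInfo() == null) {
            return uri; // nothing to strip
        }
        try {
            // Rebuild from the components, leaving out the user-info part.
            return new URI(uri.getScheme(), null, uri.getHost(), uri.getPort(),
                    uri.getPath(), uri.getQuery(), uri.getFragment());
        } catch (URISyntaxException e) {
            // Do not include the original URI here: it contains the credentials.
            throw new IllegalArgumentException("Cannot rebuild URI without credentials", e);
        }
    }
}
```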

File Utilities

Creating safe filenames

Sometimes you might want to use entities such as task names in file names. Use the toSafeFilename method to obtain a string that can be used as part of a filename.
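A simple way to obtain such a string is to replace every character outside a conservative allow-list. The Java sketch below does that; SafeFilenameSketch is hypothetical, and Grolifant's toSafeFilename may use different replacement rules.

```java
import java.util.regex.Pattern;

// Hypothetical sketch; Grolifant's toSafeFilename may use different rules.
final class SafeFilenameSketch {
    private SafeFilenameSketch() {}

    // Anything outside letters, digits, dot, underscore and hyphen is unsafe.
    private static final Pattern UNSAFE = Pattern.compile("[^A-Za-z0-9._-]");

    static String toSafeFilename(String name) {
        return UNSAFE.matcher(name).replaceAll("_");
    }
}
```

For example, a task path such as compileJava:debug/x y becomes compileJava_debug_x_y.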

Listing child directories of a directory

listDirs provides a list of child directories of a directory.
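In plain Java the same kind of listing can be sketched with File.listFiles and a directory filter. ListDirsSketch is a hypothetical helper; returning an empty list for a missing or unreadable directory and sorting the result are choices of this sketch.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of listing the immediate child directories of a directory.
final class ListDirsSketch {
    private ListDirsSketch() {}

    static List<File> listDirs(File parent) {
        File[] children = parent.listFiles(File::isDirectory);
        // listFiles returns null if parent is not a directory or cannot be read.
        if (children == null) {
            return new ArrayList<>();
        }
        List<File> dirs = new ArrayList<>(Arrays.asList(children));
        dirs.sort(null); // natural (path) order, for predictable output
        return dirs;
    }
}
```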

Resolving the location of a class

For some cases it is handy to resolve the location of a class, so that it can be added to a classpath.

One use case is for javaexec processes and Gradle workers.

Use resolveClassLocation to obtain a File object for the location of the class.

If the class is located in a JAR, the path to the JAR will be returned.

If the class is directly on the filesystem, the top-level directory of the package hierarchy that the class belongs to will be returned.
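One standard JDK way to find where a class was loaded from is the CodeSource of its ProtectionDomain, which yields exactly a JAR file or a top-level classes directory. The Java sketch below uses that route; ClassLocationSketch is a hypothetical helper, not Grolifant's resolveClassLocation, and it returns null where the JVM exposes no file location, for example for JDK classes.

```java
import java.io.File;
import java.net.URISyntaxException;
import java.net.URL;
import java.security.CodeSource;

// Hypothetical sketch using the standard JDK route to a class's origin.
final class ClassLocationSketch {
    private ClassLocationSketch() {}

    // Returns the JAR or the top-level classes directory the class was loaded
    // from, or null if no file location is available.
    static File locate(Class<?> type) {
        CodeSource source = type.getProtectionDomain().getCodeSource();
        if (source == null || source.getLocation() == null) {
            return null; // e.g. classes from the bootstrap class loader
        }
        URL location = source.getLocation();
        if (!"file".equals(location.getProtocol())) {
            return null;
        }
        try {
            return new File(location.toURI());
        } catch (URISyntaxException e) {
            throw new IllegalStateException("Unexpected location " + location, e);
        }
    }
}
```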

If you run into java.lang.NoClassDefFoundError: org/gradle/internal/classpath/Instrumented issues on Gradle 6.5+, especially when calling javaexec, you can potentially work around this issue by doing some substitution. Use resolveClassLocation from the v6 package.

projectOperations.jvmTools.resolveClassLocation(
    myClass, (1)
    project.rootProject.buildscript.configurations.getByName('classpath'), (2)
    ~/myjar-.+\.jar/ (3)
)

| 1 | The class you are looking for. |

| 2 | A file collection to search. The root project’s buildscript classpath is a common use case. |

| 3 | The JAR you would like to use instead. |

Obtaining the project cache directory

If a plugin needs to cache information in the local cache directory, it is important that it determines this folder correctly. You can call projectCacheDirFor to achieve this.

Converting objects to files

You are probably familiar with project.file to convert a single object to a file.

Grolifant offers similar, but more powerful, methods.

See file & fileOrNull for converting single objects.

It also provides versions that take a collection of objects and convert them to a list of files.

See files & filesDropNull.

You can also update any Property<File> using the updateFileProperty utility method.
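The difference between the four variants can be sketched in Java as follows. FileConversionSketch and its method names are hypothetical; relative paths are resolved against a base directory, much like project.file, and Supplier stands in for Gradle's Provider so the sketch runs without Gradle.

```java
import java.io.File;
import java.net.URI;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.function.Supplier;

// Hypothetical sketch of file/fileOrNull/files/filesDropNull-style conversion.
final class FileConversionSketch {
    private final File baseDir;

    FileConversionSketch(File baseDir) {
        this.baseDir = baseDir;
    }

    // Returns null if the (evaluated) item is null.
    File fileOrNull(Object item) {
        Object value = evaluate(item);
        if (value == null) {
            return null;
        }
        if (value instanceof URI) {
            return new File((URI) value); // must be an absolute file: URI
        }
        File f = value instanceof File ? (File) value
                : value instanceof Path ? ((Path) value).toFile()
                : new File(value.toString());
        return f.isAbsolute() ? f : new File(baseDir, f.getPath());
    }

    // Like fileOrNull, but a null result is an error.
    File file(Object item) {
        File f = fileOrNull(item);
        if (f == null) {
            throw new IllegalArgumentException("Cannot convert null to a file");
        }
        return f;
    }

    // Every item must convert to a file.
    List<File> files(Iterable<?> items) {
        List<File> result = new ArrayList<>();
        for (Object item : items) {
            result.add(file(item));
        }
        return result;
    }

    // Items that evaluate to null are silently dropped.
    List<File> filesDropNull(Iterable<?> items) {
        List<File> result = new ArrayList<>();
        for (Object item : items) {
            File f = fileOrNull(item);
            if (f != null) {
                result.add(f);
            }
        }
        return result;
    }

    // Unwraps lazy values; Groovy Closures implement Callable.
    private static Object evaluate(Object item) {
        Object value = item;
        while (value instanceof Callable || value instanceof Supplier) {
            try {
                value = value instanceof Callable
                        ? ((Callable<?>) value).call()
                        : ((Supplier<?>) value).get();
            } catch (Exception e) {
                throw new IllegalStateException("Failed to evaluate lazy value", e);
            }
        }
        return value;
    }
}
```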

Downloading Tools & Packages

Distribution Installer

There are quite a number of occasions where it would be useful to download various versions of SDKs or distributions from a variety of sources and then install them locally without having to affect the environment of a user. The Gradle Wrapper is already a good example of this. Obviously it would be good if one could also utilise other solutions that manage distributions and SDKs on a per-user basis, such as the excellent SDKMAN!.

The AbstractDistributionInstaller abstract class provides the base for plugin developers to add such functionality to their plugins without too much trouble.

Getting started

@CompileStatic
class TestInstaller extends AbstractDistributionInstaller {

    public static final String DISTPATH = 'foo/bar'

    TestInstaller(ProjectOperations projectOperations) {
        super('Test Distribution', DISTPATH, projectOperations) (1)
    }

    @Override
    URI uriFromVersion(String version) { (2)
        "https://distribution.example/download/testdist-${version}.zip".toURI() (3)
    }
}

| 1 | The installer needs to be provided with a human-readable name and a relative path below the installation for installing this type of distribution. |

| 2 | The uriFromVersion method is used to return an appropriate URI from which to download the specific version of the distribution. Supported protocols are all those supported by the Gradle Wrapper and include file, http(s) and ftp. |

| 3 | Use code appropriate to your specific distribution to calculate the URI. |

The download is invoked by calling the getDistributionRoot method.

The above example uses Groovy to implement an installer class, but you can use Java, Kotlin or any other JVM-language that works for writing Gradle plugins.

How it works

When getDistributionRoot is called, it effectively uses the following logic:

File location = locateDistributionInCustomLocation(version) (1)
if (location == null && sdkManCandidateName) { (2)
    location = getDistFromSdkMan(version)
}
location ?: getDistFromCache(version) (3)

| 1 | If a custom location is specified, look there first for the specific version. |

| 2 | If SDKMAN! has been enabled, check whether it has the distribution available. |

| 3 | Try to get it from the cache. If it is not in the cache, try to download it. |

Marking files executable

Files in some distributed archives are platform-agnostic and it is necessary to mark specific files as executable after unpacking. The addExecPattern method can be used for this purpose.

addExecPattern('**/*.sh') (1)

| 1 | Assuming the TestInstaller from Getting started, this example will mark all shell files in the distribution as executable once the archive has been unpacked. |

Patterns are ANT-style patterns as is common in a number of Gradle APIs.

Search in custom locations

The locateDistributionInCustomLocation method can be used for setting up a search in specific locations.

For example a person implementing a Ceylon language plugin might want to look in the ~/.ceylon folder for an existing installation of a specific version.

This optional implementation is completely left up to the plugin author as it will be very specific to a distribution. The method should return null if nothing was found.

Changing the download and unpack root location

By default, downloaded distributions will be placed in a subfolder below the Gradle user home directory, as specified during construction time. It is possible, especially for testing purposes, to use a root folder other than Gradle user home by setting downloadRoot.

Utilising SDKMAN!

SDKMAN! is a very useful local SDK installation and management tool and when specific SDKs or distributions are already supported it makes sense to re-use them in order to save on download time.

All that is required is to provide the SDKMAN! candidate name using the setSdkManCandidateName method.

installer.sdkManCandidateName = 'ceylon' (1)

| 1 | Sets the candidate name for a distribution as it will be known to SDKMAN!. In this example the Ceylon language distribution is used. |

Checksum

By default the installer will not verify any checksum, but calling setChecksum will force the installer to perform a check after downloading and before unpacking. It is possible to change this behaviour by overriding verification.

Only SHA-256 checksums are supported. If you need something else you will need to override verification and provide your own checksum test.
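For reference, a SHA-256 check of a downloaded archive can be sketched in plain Java with MessageDigest. Sha256Sketch and verify are hypothetical names, not Grolifant's API; treating a null checksum as "nothing to check" mirrors the behaviour described for verification.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical sketch of a SHA-256 check after downloading and before unpacking.
final class Sha256Sketch {
    private Sha256Sketch() {}

    static String sha256Hex(byte[] data) {
        return toHex(digest().digest(data));
    }

    static String sha256Hex(Path file) throws IOException {
        MessageDigest md = digest();
        try (InputStream in = Files.newInputStream(file)) {
            byte[] buffer = new byte[8192];
            int read;
            while ((read = in.read(buffer)) != -1) {
                md.update(buffer, 0, read);
            }
        }
        return toHex(md.digest());
    }

    // Throws if the archive does not match; a null checksum means "not checked".
    static void verify(Path archive, String expectedSha256) throws IOException {
        if (expectedSha256 == null) {
            return;
        }
        String actual = sha256Hex(archive);
        if (!actual.equalsIgnoreCase(expectedSha256.trim())) {
            throw new IllegalStateException("Checksum mismatch for " + archive
                    + ": expected " + expectedSha256 + " but was " + actual);
        }
    }

    private static MessageDigest digest() {
        try {
            return MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is required on every Java platform, so this should not happen.
            throw new IllegalStateException("SHA-256 not available", e);
        }
    }

    private static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }
}
```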

Advanced: Override unpacking

By default, AbstractDistributionInstaller already knows how to unpack ZIPs and TARs of a variety of compressions. If something else is required, then the unpack method can be overridden.

This is the approach to follow if you need support for unpacking MSIs.

There is a helper method called unpackMSI which will install and then call the lessmsi utility with the correct parameters.

In order to use this in a practical way it is better to override the unpack method and call it from there. For example:

@Override
protected void unpack(File srcArchive, File destDir) {
    if (srcArchive.name.endsWith('.msi')) {
        unpackMSI(srcArchive, destDir, [:]) (1)
        // Add additional file and directory manipulation here if needed
    } else {
        super.unpack(srcArchive, destDir)
    }
}

| 1 | The third parameter can be used to set up a special environment for lessmsi if needed. |

In a similar fashion, DMGs can be unpacked on Mac OS X platforms. It uses hdiutil under the hood.

@Override
protected void unpack(File srcArchive, File destDir) {
    if (srcArchive.name.endsWith('.dmg')) {
        unpackDMG(srcArchive, destDir)
        // Add additional file and directory manipulation here if needed
    } else {
        super.unpack(srcArchive, destDir)
    }
}

Installing single files

In some cases, tools are supplied as single executables. terraform and packer are such examples.

Use AbstractSingleFileInstaller instead.

class TestInstaller extends AbstractSingleFileInstaller { (1)

    TestInstaller(final ProjectOperations po) {
        super('mytool', 'native-binaries/mytool', po) (2)
    }

    @Override
    protected String getSingleFileName() { (3)
        OperatingSystem.current().windows ? 'mytool.exe' : 'mytool'
    }
}

| 1 | Extend AbstractSingleFileInstaller instead. |

| 2 | The version is no longer used in the constructor of the super class. |

| 3 | Implement a method to obtain the name of the file. |

You can access a downloaded single file by version. Simply call getSingleFile(version).

Advanced: Override verification

Verification of a downloaded distribution occurs in two parts:

- If a checksum is supplied, the downloaded archive is validated against the checksum. The standard implementation will only check SHA-256 checksums.
- The unpacked distribution is then checked for sanity. In the default implementation this simply checks that only one directory was unpacked below the distribution directory. This effectively replicates the Gradle Wrapper behaviour.

Once again it is possible to customise this behaviour if your distribution has different needs. In this case there are two protected methods that can be overridden:

- verifyDownloadChecksum - Override this method to take care of handling checksums. When called, the method will be passed the URI where the distribution was downloaded from, the location of the archive on the filesystem and the expected checksum. It is possible to pass null for the latter, which means that no checksum is available.
- getAndVerifyDistributionRoot - This validates the distribution on disk. When called, it is passed the location where the distribution was unpacked into. The method should return the effective home directory of the distribution.

In the case of getAndVerifyDistributionRoot, it can sometimes be confusing what the distDir is and what should be returned.

This is easiest to explain by looking at how Gradle wrapper distributions are stored.

For instance for Gradle 7.0 the distDir might be something like ~/.gradle/wrapper/dists/gradle-7.0-bin/2z3tfybitalx2py5dr8rf2mti/ whereas the return directory would be ~/.gradle/wrapper/dists/gradle-7.0-bin/2z3tfybitalx2py5dr8rf2mti/gradle-7.0.
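The default sanity check and the relationship between distDir and the returned directory can be sketched in Java. DistributionRootSketch is a hypothetical helper that follows the behaviour described above (exactly one directory unpacked below distDir, which becomes the distribution home); an override of getAndVerifyDistributionRoot for a real distribution may need different rules.

```java
import java.io.File;

// Hypothetical sketch of the default "exactly one unpacked directory" check.
final class DistributionRootSketch {
    private DistributionRootSketch() {}

    static File verifyDistributionRoot(File distDir) {
        File[] dirs = distDir.listFiles(File::isDirectory);
        int count = dirs == null ? 0 : dirs.length;
        if (count != 1) {
            throw new IllegalStateException("Expected exactly one directory below "
                    + distDir + " but found " + count);
        }
        // e.g. .../2z3tfybitalx2py5dr8rf2mti/ -> .../2z3tfybitalx2py5dr8rf2mti/gradle-7.0
        return dirs[0];
    }
}
```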


Helper and other protected API methods

- listDirs provides a listing of directories directly below an unpacked distribution. It can also be used for any directory if the intent is to see which child directories are available.

Exclusive File Access

When creating a plugin that will potentially access state shared between different Gradle projects, such as downloaded files, co-operative exclusive file access is required. This can be achieved by using ExclusiveFileAccess.

File someFile

ExclusiveFileAccess accessManager = new ExclusiveFileAccess(120000, 200) (1)

accessManager.access( someFile ) {
    // ... do something, whilst someFile is being accessed
} (2)

| 1 | Set the timeout for waiting for the file to become available, and the polling interval. Both are in milliseconds. |

| 2 | Run this closure whilst this file is locked. You can also use anything that implements Callable<T>. |

The value returned from the closure or callable is the one returned from the access method.
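The internals of ExclusiveFileAccess are not described here, so the following Java sketch shows one common way to obtain co-operative exclusive access: polling FileChannel.tryLock on a companion lock file until a timeout expires. ExclusiveAccessSketch and the .lck suffix are hypothetical. The lock is only co-operative, so it protects the file only from processes that use the same locking scheme.

```java
import java.io.File;
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.channels.FileLock;
import java.nio.channels.OverlappingFileLockException;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.Callable;

// Hypothetical sketch of co-operative exclusive file access; not Grolifant's code.
// A companion "<file>.lck" is locked so the target itself can be rewritten freely.
final class ExclusiveAccessSketch {
    private final long timeoutMillis;
    private final long pollMillis;

    ExclusiveAccessSketch(long timeoutMillis, long pollMillis) {
        this.timeoutMillis = timeoutMillis;
        this.pollMillis = pollMillis;
    }

    // Runs the action while holding the lock and returns the action's result.
    <T> T access(File file, Callable<T> action) throws Exception {
        File lockFile = new File(file.getPath() + ".lck");
        long deadline = System.currentTimeMillis() + timeoutMillis;
        try (FileChannel channel = FileChannel.open(lockFile.toPath(),
                StandardOpenOption.CREATE, StandardOpenOption.WRITE)) {
            while (true) {
                FileLock lock = tryLock(channel);
                if (lock != null) {
                    try {
                        return action.call();
                    } finally {
                        lock.release();
                    }
                }
                if (System.currentTimeMillis() >= deadline) {
                    throw new IOException("Timed out waiting for exclusive access to " + file);
                }
                Thread.sleep(pollMillis); // polling interval
            }
        }
    }

    // Returns null if another process (or another thread in this JVM) holds the lock.
    private static FileLock tryLock(FileChannel channel) throws IOException {
        try {
            return channel.tryLock();
        } catch (OverlappingFileLockException e) {
            return null;
        }
    }
}
```

As with access above, the value returned by the action is passed back to the caller; new ExclusiveAccessSketch(120000, 200) would mirror the timeout and polling values of the earlier example.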

Utilities for Gradle Entities

Configuration Utilities

As from 2.1, Grolifant offers a ConfigurationTools interface which is available via ProjectOperations.getConfigurations().

Creating dependency-scoped Configuration definitions

In order to simplify dependency-scoped configurations and offer backwards compatibility with Gradle versions prior to 8.4, check out createRoleFocusedConfigurations.

Converting objects to Configuration instances

Adding configurations to tasks is not an uncommon situation. In order to give plugin authors more flexibility, utilities have been added to lazily resolve items to project configurations. Grolifant offers the asConfiguration method to resolve a single item to a configuration. It can be provided with an existing configuration or anything resolvable to a string using stringize. If the configurations do not exist at the time they are evaluated, an exception will be thrown. There is also a version that takes a collection of objects and converts them to a list of configurations.

Task Utilities

Lazy create tasks

In Gradle 4.9, functionality was added to allow for lazy creation and configuration of tasks. Although this provides the ability for a Gradle build to be configured much quicker, it creates a dilemma for plugin authors wanting to be backwards compatible.

Before Grolifant 1.3, TaskProvider was provided to auto-switch between create and register, but this has been superseded by TaskTools.register.

An instance of TaskTools can be obtained via ProjectOperations.getTasks.


Configure a task upon optional configuration

Sometimes a task might never be registered, but if it does get registered and later created, adding configuration at that point is a good option. For this you can use TaskTools.whenNamed.

Lazy evaluate objects to task instances

The Task.dependsOn method allows various ways for objects to be evaluated to tasks at a later point in time.

Unfortunately this kind of functionality is not available via a public API, so plugin authors who want to create links between tasks for domain reasons have to find other ways of creating lazily evaluated task dependencies.

In 0.17.0 we added TaskUtils.taskize to help relieve this problem, but from 1.3 the better solution is to use TaskTools.taskize.

// The following imports are assumed
import org.gradle.api.Task
import org.ysb33r.grolifant.api.core.ProjectOperations

projectOperations.tasks.named('foo') { Task t ->
    t.dependsOn(projectOperations.tasks.taskize('nameOfTask1')) (1)
    t.dependsOn(projectOperations.tasks.taskize({ -> 'nameOfTask2' })) (2)
    t.dependsOn(projectOperations.tasks.taskize(['nameOfTask3', 'nameOfTask4'])) (3)
}

| 1 | Resolves a single item to a task within a project context. |

| 2 | Single items could be recursively evaluated if applicable, for example Closures returning strings. |

| 3 | Resolves an iterable sequence of items to a list of tasks within a project context. Items are recursively evaluated if necessary. |

The full list of supported items is always described in the API documentation, but the following list can be used as a general guideline:

- Gradle Task.
- Gradle TaskProvider (Gradle 4.8+).
- Grolifant TaskProvider (deprecated).
- Any CharSequence, including String and GString.
- Any Gradle Provider of the above types.
- Any Callable implementation, including Groovy Closures, that returns one of the above values.
- Any iterable sequence of the above types.
- A Map for which the values are evaluated (the keys are ignored).
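The recursive evaluation rules in this list can be sketched in Java. To stay runnable without Gradle, TaskNameFlattener (a hypothetical name) resolves items to task names rather than Task instances, uses Supplier as a stand-in for Gradle's Provider, and leaves out Task and TaskProvider handling entirely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.function.Supplier;

// Hypothetical sketch of recursive taskize-style evaluation, producing task names.
final class TaskNameFlattener {
    private TaskNameFlattener() {}

    static List<String> taskNames(Object item) {
        List<String> names = new ArrayList<>();
        collect(item, names);
        return names;
    }

    private static void collect(Object item, List<String> out) {
        if (item instanceof CharSequence) {
            out.add(item.toString());
        } else if (item instanceof Map) {
            // Only the values are evaluated; the keys are ignored.
            for (Object value : ((Map<?, ?>) item).values()) {
                collect(value, out);
            }
        } else if (item instanceof Iterable) {
            for (Object element : (Iterable<?>) item) {
                collect(element, out);
            }
        } else if (item instanceof Callable) {
            try {
                collect(((Callable<?>) item).call(), out);
            } catch (Exception e) {
                throw new IllegalStateException("Failed to evaluate " + item, e);
            }
        } else if (item instanceof Supplier) {
            collect(((Supplier<?>) item).get(), out);
        } else {
            // Includes null: there is no sensible task name for it.
            throw new IllegalArgumentException("Cannot resolve " + item + " to a task name");
        }
    }
}
```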

SkipWhenEmpty & IgnoreEmptyDirectories

In Gradle 6.8 @IgnoreEmptyDirectories was added.

In Gradle 7.x there is a deprecation warning for using @SkipWhenEmpty with FileTree.

In order to keep forward compatibility, but still compile with a version prior to Gradle 6.8, a small idiom can be followed.

Consider that you had something like this.

@SkipWhenEmpty
@InputFiles
FileTree getSourceFiles() {
    this.mySourceFiles (1)
}

| 1 | Assuming that the FileTree is held in a field called mySourceFiles. |

Change the above to be

@Internal
FileTree getSourceFiles() {
    this.mySourceFiles
}

Then change the constructor to include

MyTask() {
    projectOperations.tasks.ignoreEmptyDirectories(inputs, this.mySourceFiles)
}

It is also possible to configure using additional options and path sensitivity.

// Assume these imports
import org.gradle.api.tasks.PathSensitivity
import static org.ysb33r.grolifant.api.core.TaskInputFileOptions.*

MyTask() {
    projectOperations.tasks.inputFiles(
        inputs,
        this.mySourceFiles,
        PathSensitivity.RELATIVE,
        OPTIONAL, SKIP_WHEN_EMPTY, IGNORE_EMPTY_DIRECTORIES, NORMALIZE_LINE_ENDINGS
    )
}

JVM Utilities

Java Fork Options

There are a number of places in the Gradle API which utilise JavaForkOptions, but there is no easy way for a plugin provider to create a set of Java options for later usage. For this purpose we have created a version that looks the same and implements most of the methods of the interface.

Here is an example of using it with a Gradle worker configuration.

ProjectOperations po = ProjectOperations.find(project)
JvmForkOptions jfo = po.jvmTools.javaForkOptions
jfo.systemProperties 'a.b.c' : 1

workerExecutor.submit(RunnableWorkImpl.class) { WorkerConfiguration conf ->
    forkOptions { org.gradle.process.JavaForkOptions options ->
        jfo.copyTo(options)
    }
}

Searching the classpath

Sometimes one wants to know in which file a class is located.

This might be important as it might be necessary to put a JAR on a classpath of an external JVM process.

For this purpose there is resolveClassLocation and it comes in a number of variations.

| Variation | Description |
|---|---|
|  | Simply looks for a class and returns the location, which could be a file, a directory or a module. |
|  | Searches for a class, then, if it is found and it is a JAR, looks to see whether it is also in the substitution path. If so, the location from the substitution search is used. The pattern is typically the name of a JAR i.e. |
|  | As the previous, but offers more control over what needs to be substituted. |
|  | As before, but allows specification of what can be ignored. |
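The substitution idea can be sketched in Java: if a class was located in a JAR, prefer a JAR from a substitution search path whose file name matches a pattern such as myjar-.+\.jar. ClassLocationSubstitution is a hypothetical helper and a simplification; the real variations may match and ignore entries differently.

```java
import java.io.File;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical sketch of substituting a located JAR with one from a search path.
final class ClassLocationSubstitution {
    private ClassLocationSubstitution() {}

    static File substitute(File located, List<File> substitutionPath, Pattern jarNamePattern) {
        if (located == null || !located.getName().endsWith(".jar")) {
            return located; // directories and unknown locations are left alone
        }
        for (File candidate : substitutionPath) {
            if (jarNamePattern.matcher(candidate.getName()).matches()) {
                return candidate; // first match on the substitution path wins
            }
        }
        return located;
    }
}
```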

Extending Repository Handler

It is possible to add additional methods to project.repositories in a way that safely works with both Kotlin & Groovy.

See ExtensionUtils.bindRepositoryHandlerService for more details.

Extending Dependency Handler

It is possible to add additional methods to project.dependencies in a way that safely works with both Kotlin & Groovy.

Repositories

As from Grolifant 0.10, support for implementing new repository types that optionally also need to support credentials is available. Two classes are currently available:

- AbstractAuthenticationSupportedRepository is a base class for plugin authors to extend.
- SimplePasswordCredentials is exactly what the name says - a simple memory-based object containing a username and a password.

See also: Extending RepositoryHandler.

Working with Gradle Property

The Property interface introduced in Gradle 4.3 is very useful for lazy evaluation.

For compatibility and usability purposes, Property instances should be used as private members and exposed as Provider instances on getters.

Property Providers

In Gradle 6.1, the ProviderFactory introduced methods for obtaining Gradle properties, system properties and environment variables as providers. In order to use these facilities safely you can now use the appropriate methods on ProjectOperations. In addition, for the forUseAtConfigurationTime method that was introduced in Gradle 6.5, there are variants of these methods that use similar functionality when available. Using these methods can safeguard your plugin when it is used on a Gradle version where the configuration cache is enabled.

ProjectOperations grolifant = ProjectOperations.find(project)

Provider<String> sysProp1 = grolifant.systemProperty( 'some-property' ) (1)
Provider<String> sysProp2 = grolifant.systemProperty( 'some-property', grolifant.atConfigurationsTime() ) (2)
Provider<String> gradleProp1 = grolifant.gradleProperty( 'some-property' ) (3)
Provider<String> gradleProp2 = grolifant.gradleProperty( 'some-property', grolifant.atConfigurationsTime() ) (4)
Provider<String> env1 = grolifant.environmentVariable( 'SOME_ENV' ) (5)
Provider<String> env2 = grolifant.environmentVariable( 'SOME_ENV', grolifant.atConfigurationsTime() ) (6)

| 1 | System property provider. |

| 2 | System property provider that is safe to read at configuration time. |

| 3 | Gradle property provider. |

| 4 | Gradle property provider that is safe to read at configuration time. |

| 5 | Environment variable provider. |

| 6 | Environment variable provider that is safe to read at configuration time. |

Working with Executables

Creating Script Wrappers

Consider that you have a plugin that already downloads and caches node or terraform, and you want to try something on the command line with the tool directly.

You do not want to install the tool again if you could just use the already cached version.

It would be of the correct version as required by the project in any case.

You are probably very familiar with the Gradle wrapper. Now it could be nice under certain circumstances to create wrappers that will call the executables from distributions that were installed using the Distribution Installer. Since 0.14 Grolifant offers two abstract task types to help you add such functionality to your plugins.

These task types attempt to address the following:

- Create wrappers for tools, be they executables or scripts, that will point to the correct version as required by the specific project.
- Realise they are out of date if the version or location of the distribution/tool changes.
- Cache the distribution/tool if it is not yet cached.

Creating a wrapper task

Let’s assume you would like to create a plugin for Hashicorp’s Packer.

Let’s assume that you have already created an extension class which extends AbstractToolExtension and is called PackerExtension.

Let’s also assume that this class knows how to download packer for the appropriate platform which you probably implemented using AbstractDistributionInstaller.

Since 0.14 the only supported implementation is to place the template files in a directory path in resources and then substitute values by tokens. This implementation uses Ant ReplaceTokens under the hood.

Start by extending AbstractWrapperGeneratorTask.

class MyPackerWrapper extends AbstractWrapperGeneratorTask {

    MyPackerWrapper() {
        useWrapperTemplatesInResources( (1)
            '/packer-wrappers', (2)
            ['wrapper-template.sh' : 'packerw', (3)
             'wrapper-template.bat': 'packerw.bat'
            ]
        )
    }
}

| 1 | Although this is currently the only supported method, it has to be explicitly specified that wrapper templates are in resources. |

| 2 | Specify the resource path where to find the resource wrappers. This resource path will be scanned for files as defined below. |

| 3 | Specify a map which maps the names of files in the resource path to final file names. The format is [ <WRAPPER TEMPLATE NAME> : <FINAL SCRIPT NAME> ]. Although the final script names can be specified using a relative path, convention is to just place the wrapper scripts in the project directory. See example script wrappers for some inspiration. |

The next step is to provide the tokens that can be substituted, by implementing the appropriate abstract methods.

@Override
protected String getBeginToken() { (1)
    '~~'
}

@Override
protected String getEndToken() { (2)
    '~~'
}

@Override
protected Map<String, String> getTokenValuesAsMap() { (3)
    [
        APP_BASE_NAME : 'packer',
        APP_LOCATION_CONFIG : '/path/to/packer',
        CACHE_TASK_NAME : 'myCacheTask',
        GRADLE_WRAPPER_RELATIVE_PATH: '.',
        DOT_GRADLE_RELATIVE_PATH : './.gradle'
    ]
}

| 1 | Start token for substitution. This can be anything. This example uses ~~ because it matches the delimiter from the example script wrappers. |

| 2 | End token for substitution. |

| 3 | Return a map of the values for substituting into the template when creating the scripts. |
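AbstractWrapperGeneratorTask uses Ant ReplaceTokens under the hood, so the following Java sketch only illustrates what begin/end token substitution does with a map like the one above. TokenReplacementSketch is a hypothetical name, and the sketch assumes token names consist of letters, digits and underscores; unknown tokens are left untouched.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of begin/end token substitution, similar in spirit to
// Ant's ReplaceTokens filter. Unknown tokens are left as-is.
final class TokenReplacementSketch {
    private TokenReplacementSketch() {}

    static String replaceTokens(String template, String beginToken, String endToken,
                                Map<String, String> values) {
        Pattern pattern = Pattern.compile(
                Pattern.quote(beginToken) + "([A-Za-z0-9_]+)" + Pattern.quote(endToken));
        Matcher m = pattern.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = values.get(m.group(1));
            String replacement = value != null ? value : m.group(0);
            // quoteReplacement stops '$' or '\' in values being treated specially.
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

With ~~ as both begin and end token, a template line such as exec ~~APP_BASE_NAME~~ would become exec packer.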

At this point you can test the task, and it should generate wrappers. However, there are a number of shortcomings:

- When somebody clones a project that contains the wrappers for the first time, there is a good chance that none of the wrapped binaries will be cached yet, and when they are cached they might end up at a different location due to the environment of the user.
- The classic place to cache something is in the project cache directory, but this can be overridden from the command line, so special care has to be taken.
- You might have pulled an updated version of the project and the version of the wrapped binary has been changed by the project maintainers.

Let’s start by creating a caching task first.

Creating a caching task

Create a task type that extends AbstractWrapperCacheBinaryTask.

@CompileStatic
class MyPackerWrapperCacheTask extends AbstractWrapperCacheBinaryTask {

    MyPackerWrapperCacheTask() {
        super('packer-wrapper.properties') (1)
        this.packerExtension = project.extensions.getByType(MyToolExtensionWithDownloader) (2)
    }

    private MyToolExtensionWithDownloader packerExtension
}

| 1 | Define a properties file that will store appropriate information about the cached binary, local to the project on a user’s computer or in CI. |

| 2 | As an example, we use MyToolExtensionWithDownloader, which we defined in this example for creating tool wrappers. |

There are three minimum characteristics that need to be defined:

- Version of the binary/script/distribution, if it is set via executableByVersion('1.2.3').
- The location of the binary/script.
- Description of the wrapper.

This is done by implementing three abstract methods.

@Override
protected Provider<String> getBinaryLocationProvider() {
    projectOperations.providerTools.map(packerExtension.executable) { it.absolutePath } (1)
}

@Override
protected Provider<String> getBinaryVersionProvider() {
    packerExtension.resolvedExecutableVersion() (2)
}

@Override
protected String getPropertiesDescription() {
    "Describes the Packer usage for the ${projectOperations.projectName} project" (3)
}

| 1 | Provide the path to where the executable is located. In this case we do not need the file itself, but only the string path, as it will be written to the wrapper script. |

| 2 | Get the version of the downloadable executable. |

| 3 | A description that will be used in the properties file. |

If you execute an instance of your new task type it will automatically cache the binary/distribution dependent on how it has been defined. It will also generate a properties file into the project cache directory. This latter file should be ignored by source control, and the project cache directory should never be in source control.
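As an illustration only, the Java sketch below writes and reads such a project-local properties file with java.util.Properties. WrapperPropertiesSketch and the keys APP_LOCATION and APP_VERSION are hypothetical; the file actually written by AbstractWrapperCacheBinaryTask may use different keys and layout.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;

// Hypothetical sketch of a wrapper properties file such as packer-wrapper.properties.
final class WrapperPropertiesSketch {
    private WrapperPropertiesSketch() {}

    static void write(Path file, String description, String location, String version)
            throws IOException {
        Properties props = new Properties();
        props.setProperty("APP_LOCATION", location); // hypothetical key
        props.setProperty("APP_VERSION", version);   // hypothetical key
        Files.createDirectories(file.toAbsolutePath().getParent());
        try (Writer w = Files.newBufferedWriter(file, StandardCharsets.UTF_8)) {
            props.store(w, description); // the description becomes a header comment
        }
    }

    static Properties read(Path file) throws IOException {
        Properties props = new Properties();
        try (Reader r = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            props.load(r);
        }
        return props;
    }
}
```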

The next step is to revisit the wrapper task and link it to the caching task.

Linking the caching and wrapper tasks

Return to the wrapper task and modify the constructor as follows:

@Inject
MyPackerWrapper(Provider<MyPackerWrapperCacheTask> cacheTask) { (1)
    super()
    this.cacheTask = cacheTask
    dependsOn(cacheTask) (2)
    inputs.file(
        projectOperations.providerTools.map(cacheTask) {
            projectOperations.fsOperations.file(it.locationPropertiesFile)
        }
    ) (3)
    useWrapperTemplatesInResources(
        '/packer-wrappers',
        ['wrapper-template.sh' : 'packerw',
         'wrapper-template.bat': 'packerw.bat'
        ]
    )
}

private final Provider<MyPackerWrapperCacheTask> cacheTask

| 1 | Restrict the wrapper task type to only be instantiated if there is an associated caching task. Use a Provider if compatibility before Gradle 4.8 is required, otherwise a TaskProvider will suffice. |

| 2 | If the wrapper task is run, then the caching task should also be run if it is out of date. |

| 3 | If the location of the properties file has changed, the wrapper task should be out of date. |

If you want compatibility back to Gradle 4.3 - 4.6, the above will not work, as parameter injection for tasks is not available. In this case you need to modify it accordingly:

MyPackerWrapperCompatibleWithGradle40() {
    super()
    useWrapperTemplatesInResources(
        '/packer-wrappers',
        ['wrapper-template.sh' : 'packerw',
         'wrapper-template.bat': 'packerw.bat'
        ]
    )

    final cacheTaskName = 'packerWrapperCache'
    this.cacheTask = projectOperations.tasks.taskProviderFrom(
        project.tasks,
        project.providers,
        cacheTaskName
    ) as Provider<MyPackerWrapperCacheTask>
    dependsOn(cacheTaskName)
    inputs.file("${cacheTask.get().locationPropertiesFile.get()}") (1)
}

| 1 | These old versions do not support a Provider being passed to TaskInputs. Use a GString under Groovy or a Callable under Java. |

Now change your getTokenValuesAsMap method.

(Once again we base these tokens on the ones used in MyToolExtensionDownloader).

final ct = this.cacheTask.get()
final fsOperations = projectOperations.fsOperations
[
    APP_BASE_NAME               : 'packer',
    GRADLE_WRAPPER_RELATIVE_PATH: fsOperations.relativePathNotEmpty(projectOperations.projectRootDir), (1)
    DOT_GRADLE_RELATIVE_PATH    : fsOperations.relativePath(ct.locationPropertiesFile.get().parentFile), (2)
    APP_LOCATION_CONFIG         : ct.locationPropertiesFile.get().name, (3)
    CACHE_TASK_NAME             : ct.name (4)
]

| 1 | If the project uses a Gradle wrapper, it is important that the tool wrapper script also use the Gradle wrapper to invoke the caching task. |

| 2 | Get the location of the project cache directory. |

| 3 | The name of the wrapper properties file. |

| 4 | The name of the cache task to invoke if either the wrapper properties file does not exist or the distribution/binary has not been cached. |
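The wrapper templates contain placeholders of the form ~~TOKEN~~ (see the example script wrappers), and the keys of the map above match those token names. A minimal sketch of such a substitution in plain Java follows. TokenFiller is a hypothetical name, this is not how Grolifant itself processes its templates, and failing on an unknown token is a design choice of the sketch, not documented behaviour.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: fills ~~TOKEN~~ placeholders in a wrapper template.
// Illustration only; not Grolifant's own template processing.
class TokenFiller {
    // Matches ~~NAME~~ where NAME consists of upper-case letters, digits or underscores.
    private static final Pattern TOKEN = Pattern.compile("~~([A-Z0-9_]+)~~");

    static String fill(String template, Map<String, String> tokens) {
        Matcher m = TOKEN.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = tokens.get(m.group(1));
            if (value == null) {
                throw new IllegalArgumentException("No value for token " + m.group(1));
            }
            // quoteReplacement stops '$' or '\' in a value being read as a group reference.
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> tokens = new LinkedHashMap<>();
        tokens.put("APP_BASE_NAME", "packer");
        tokens.put("APP_LOCATION_CONFIG", "packer.properties"); // hypothetical file name
        String line = "APP_LOCATION_CONFIG=$DOT_GRADLE_RELATIVE_PATH/~~APP_LOCATION_CONFIG~~";
        // prints: APP_LOCATION_CONFIG=$DOT_GRADLE_RELATIVE_PATH/packer.properties
        System.out.println(fill(line, tokens));
    }
}
```

Shell variables such as $DOT_GRADLE_RELATIVE_PATH are left untouched, because only text between ~~ markers is treated as a token.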

Putting everything in a plugin

It is recommended that the tasks created by convention are placed in a separate plugin, and that users of that plugin are advised to apply it only in the root project of a multi-project build.

In your plugin add the following code to the apply method.

projectOperations.tasks.register('packerWrapperCache', MyPackerWrapperCacheTask)
projectOperations.tasks.register(
    'packerWrapper',
    MyPackerWrapper,
    [projectOperations.tasks.taskProviderFrom(project.tasks, project.providers, 'packerWrapperCache')]
)

If you need compatibility back to Gradle 4.3 - 4.6, your registration code will be different, as the constructor of that task type takes no parameters:

projectOperations.tasks.register('packerWrapperCache', MyPackerWrapperCacheTask)
projectOperations.tasks.register('packerWrapper', MyPackerWrapperCompatibleWithGradle40)

Example script wrappers

These are provided as starting points for wrapping simple binary tools. They have been hashed together from various other open-source examples.
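Both example scripts follow the same lookup flow: if the location properties file is missing, run the caching task through Gradle; read APP_LOCATION from that file; if the binary is still missing, run the caching task again; then execute the binary. A hedged Java sketch of that flow follows. The class WrapperFlow and the Runnable that stands in for the Gradle invocation are hypothetical, and the file is assumed to contain KEY=value lines with forward-slash paths, since java.util.Properties treats backslashes as escape characters.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Properties;

// Sketch of the lookup flow used by the example wrapper scripts.
// runCacheTask is a hypothetical hook standing in for "gradlew -q <cache task>".
class WrapperFlow {
    static Path resolveApp(Path locationConfig, Runnable runCacheTask) {
        // If the location properties file does not exist, let Gradle generate it.
        if (!Files.exists(locationConfig)) {
            runCacheTask.run();
        }
        // Read the configuration values (the shell script sources the same file).
        Properties props = new Properties();
        try (Reader reader = Files.newBufferedReader(locationConfig)) {
            props.load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String location = props.getProperty("APP_LOCATION");
        if (location == null) {
            throw new IllegalStateException("APP_LOCATION missing from " + locationConfig);
        }
        Path app = Paths.get(location);
        // If the binary has not been cached yet, let Gradle download it.
        if (!Files.exists(app)) {
            runCacheTask.run();
        }
        return app;
    }
}
```

The point of the two checks is that Gradle is only started when something is actually missing; on the common path the wrapper reads one small file and executes the cached binary directly.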

#!/usr/bin/env sh

#

# ============================================================================

# (C) Copyright Schalk W. Cronje 2016 - 2024

#

# This software is licensed under the Apache License 2.0

# See http://www.apache.org/licenses/LICENSE-2.0 for license details

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

# WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

# License for the specific language governing permissions and limitations

# under the License.

# ============================================================================

#

##############################################################################

##

## ~~APP_BASE_NAME~~ wrapper script for UN*X

##

##############################################################################

# Relative path from this script to the directory where the Gradle wrapper

# might be found.

GRADLE_WRAPPER_RELATIVE_PATH=~~GRADLE_WRAPPER_RELATIVE_PATH~~

# Relative path from this script to the project cache dir (usually .gradle).

DOT_GRADLE_RELATIVE_PATH=~~DOT_GRADLE_RELATIVE_PATH~~

PRG="$0"

# Need this for relative symlinks.

while [ -h "$PRG" ] ; do

ls=`ls -ld "$PRG"`

link=`expr "$ls" : '.*-> \(.*\)$'`

if expr "$link" : '/.*' > /dev/null; then

PRG="$link"

else

PRG=`dirname "$PRG"`"/$link"

fi

done

SAVED="`pwd`"

cd "`dirname \"$PRG\"`/" >/dev/null

APP_HOME="`pwd -P`"

cd "$SAVED" >/dev/null

# OS specific support (must be 'true' or 'false').

cygwin=false

msys=false

darwin=false

nonstop=false

case "`uname`" in

CYGWIN* )

cygwin=true

;;

Darwin* )

darwin=true

;;

MINGW* )

msys=true

;;

NONSTOP* )

nonstop=true

;;

esac

# For Cygwin, switch paths to Windows format before running java

if $cygwin ; then

APP_HOME=`cygpath --path --mixed "$APP_HOME"`

CLASSPATH=`cygpath --path --mixed "$CLASSPATH"`

JAVACMD=`cygpath --unix "$JAVACMD"`

# We build the pattern for arguments to be converted via cygpath

ROOTDIRSRAW=`find -L / -maxdepth 1 -mindepth 1 -type d 2>/dev/null`

SEP=""

for dir in $ROOTDIRSRAW ; do

ROOTDIRS="$ROOTDIRS$SEP$dir"

SEP="|"

done

OURCYGPATTERN="(^($ROOTDIRS))"

# Add a user-defined pattern to the cygpath arguments

if [ "$GRADLE_CYGPATTERN" != "" ] ; then

OURCYGPATTERN="$OURCYGPATTERN|($GRADLE_CYGPATTERN)"

fi

# Now convert the arguments - kludge to limit ourselves to /bin/sh

i=0

for arg in "$@" ; do

CHECK=`echo "$arg"|egrep -c "$OURCYGPATTERN" -`

CHECK2=`echo "$arg"|egrep -c "^-"` ### Determine if an option

if [ $CHECK -ne 0 ] && [ $CHECK2 -eq 0 ] ; then ### Added a condition

eval `echo args$i`=`cygpath --path --ignore --mixed "$arg"`

else

eval `echo args$i`="\"$arg\""

fi

i=$((i+1))

done

case $i in

(0) set -- ;;

(1) set -- "$args0" ;;

(2) set -- "$args0" "$args1" ;;

(3) set -- "$args0" "$args1" "$args2" ;;

(4) set -- "$args0" "$args1" "$args2" "$args3" ;;

(5) set -- "$args0" "$args1" "$args2" "$args3" "$args4" ;;

(6) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" ;;

(7) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" "$args6" ;;

(8) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" "$args6" "$args7" ;;

(9) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" "$args6" "$args7" "$args8" ;;

esac

fi

APP_LOCATION_CONFIG=$DOT_GRADLE_RELATIVE_PATH/~~APP_LOCATION_CONFIG~~

run_gradle ( ) {

if [ -x "$GRADLE_WRAPPER_RELATIVE_PATH/gradlew" ] ; then

$GRADLE_WRAPPER_RELATIVE_PATH/gradlew "$@"

else

gradle "$@"

fi

}

app_property ( ) {

echo `cat $APP_LOCATION_CONFIG | grep $1 | cut -f2 -d=`

}

# If the app location is not available, set it first via Gradle

if [ ! -f $APP_LOCATION_CONFIG ] ; then

run_gradle -q ~~CACHE_TASK_NAME~~

fi

# Now read in the configuration values for later usage

. $APP_LOCATION_CONFIG

# If the app is not available, download it first via Gradle

if [ ! -f $APP_LOCATION ] ; then

run_gradle -q ~~CACHE_TASK_NAME~~

fi

# If global configuration is disabled which is the default, then

# point the Terraform config to the generated configuration file

# if it exists.

if [ -z "$TF_CLI_CONFIG_FILE" ] ; then

if [ "$USE_GLOBAL_CONFIG" = 'false' ] ; then

CONFIG_LOCATION=`app_property configLocation`

if [ -f $CONFIG_LOCATION ] ; then

export TF_CLI_CONFIG_FILE=$CONFIG_LOCATION

else

echo Config location specified as $CONFIG_LOCATION, but file does not exist. >&2

echo Please run the terraformrc Gradle task before using $(basename $0) again >&2

fi

fi

fi

# If we are in a project containing a default Terraform source set

# then point the data directory to the default location.

if [ -z "$TF_DATA_DIR" ] ; then

if [ -d "$PWD/src/tf/main" ] ; then

export TF_DATA_DIR=$PWD/build/tf/main

echo $TF_DATA_DIR will be used as data directory >&2

fi

fi

exec "$APP_LOCATION" "$@"

@REM

@REM ============================================================================

@REM (C) Copyright Schalk W. Cronje 2016 - 2024

@REM

@REM This software is licensed under the Apache License 2.0

@REM See http://www.apache.org/licenses/LICENSE-2.0 for license details

@REM

@REM Unless required by applicable law or agreed to in writing, software

@REM distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

@REM WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

@REM License for the specific language governing permissions and limitations

@REM under the License.

@REM ============================================================================

@REM

@if "%DEBUG%" == "" @echo off

@rem ##########################################################################

@rem

@rem ~~APP_BASE_NAME~~ wrapper script for Windows

@rem

@rem ##########################################################################

@rem Relative path from this script to the directory where the Gradle wrapper

@rem might be found.

set GRADLE_WRAPPER_RELATIVE_PATH=~~GRADLE_WRAPPER_RELATIVE_PATH~~

@rem Relative path from this script to the project cache dir (usually .gradle).

set DOT_GRADLE_RELATIVE_PATH=~~DOT_GRADLE_RELATIVE_PATH~~

@rem Set local scope for the variables with windows NT shell

if "%OS%"=="Windows_NT" setlocal

set DIRNAME=%~dp0

if "%DIRNAME%" == "" set DIRNAME=.

set APP_BASE_NAME=%~n0

set APP_HOME=%DIRNAME%

:init

@rem Get command-line arguments, handling Windows variants

if not "%OS%" == "Windows_NT" goto win9xME_args

:win9xME_args

@rem Slurp the command line arguments.

set CMD_LINE_ARGS=

set _SKIP=2

:win9xME_args_slurp

if "x%~1" == "x" goto execute

set CMD_LINE_ARGS=%*

:execute

@rem Setup the command line

set APP_LOCATION_CONFIG=%DOT_GRADLE_RELATIVE_PATH%/~~APP_LOCATION_CONFIG~~

@rem If the app location is not available, set it first via Gradle

if not exist %APP_LOCATION_CONFIG% call :run_gradle -q ~~CACHE_TASK_NAME~~

@rem Read settings in from app location properties

@rem - APP_LOCATION

@rem - USE_GLOBAL_CONFIG

@rem - CONFIG_LOCATION

call %APP_LOCATION_CONFIG%

@rem If the app is not available, download it first via Gradle

if not exist %APP_LOCATION% call :run_gradle -q ~~CACHE_TASK_NAME~~

@rem If global configuration is disabled which is the default, then

@rem point the Terraform config to the generated configuration file

@rem if it exists.

if "%TF_CLI_CONFIG_FILE%" == "" (

if "%USE_GLOBAL_CONFIG%"=="true" goto cliconfigset

if exist %CONFIG_LOCATION% (

set TF_CLI_CONFIG_FILE=%CONFIG_LOCATION%

) else (

echo Config location specified as %CONFIG_LOCATION%, but file does not exist. 1>&2

echo Please run the terraformrc Gradle task before using %APP_BASE_NAME% again 1>&2

)

)

:cliconfigset

@rem If we are in a project containing a default Terraform source set

@rem then point the data directory to the default location.

if "%TF_DATA_DIR%" == "" (

if exist %CD%\src\tf\main (

set TF_DATA_DIR=%CD%\build\tf\main

echo %CD%\build\tf\main will be used as data directory 1>&2

)

)

@rem Execute ~~APP_BASE_NAME~~

%APP_LOCATION% %CMD_LINE_ARGS%

:end

@rem End local scope for the variables with windows NT shell

if "%ERRORLEVEL%"=="0" goto mainEnd

:fail

exit /b 1

:mainEnd

if "%OS%"=="Windows_NT" endlocal

:omega

exit /b 0

:run_gradle

if exist %GRADLE_WRAPPER_RELATIVE_PATH%\gradlew.bat (

call %GRADLE_WRAPPER_RELATIVE_PATH%\gradlew.bat %*

) else (

call gradle %*

)

exit /b 0

Tool Execution Tasks and Execution Specifications

Gradle script authors are familiar with the Exec and JavaExec tasks, as well as the project methods exec and javaexec. Implementing tasks or extensions to support specific tools can involve a lot of work. This is where this set of abstract classes comes in: they reduce that work to a minimum, allowing plugin authors to think about what kind of tool functionality to wrap rather than implementing heaps of boilerplate code.

Wrapping an external tool within a Gradle plugin usually involves three components:

- Execution specification
- Project extension
- Task type

How to implement these components is described in the following sections.

As of Grolifant 2.0, the original classes in org.ysb33r.grolifant.api.v4.runnable from Grolifant 1.1 have been deprecated and replaced with a much simpler, but more declarative, solution.

The classes in org.ysb33r.grolifant.api.v4.exec that were deprecated since Grolifant 1.1 have been removed.

If you still need them, then add grolifant40-legacy-api as a dependency to your project.

Please see the upgrade guide for converting to the new classes.


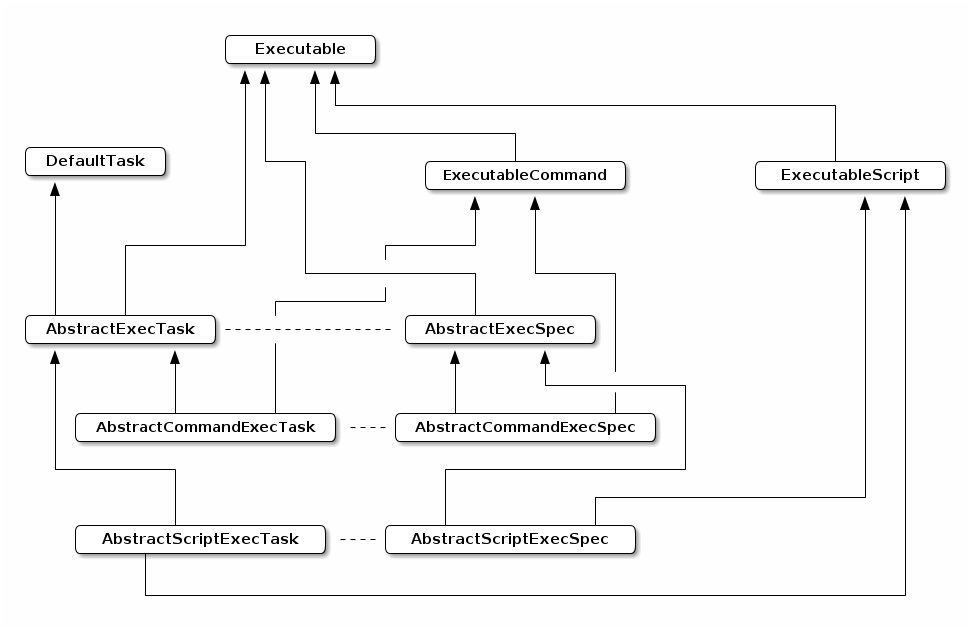

Execution specifications

Execution specifications are used for configuring the necessary details for running an external process. The specification is then used by a task type or a project extension.

The interfaces provide the configuration DSL, whereas the abstract classes form the basis for implementing your own wrappers for external execution. In all cases you need to implement the appropriate execution specification and then implement a task class that uses that specification. Under the hood, the classes take care of configuring an ExecSpec and then executing it. In most cases, an AbstractExecSpec is the minimum you need to set up.

If you want to implement tasks around an executable that uses commands, then you need AbstractCommandExecSpec.

Examples of such executables are terraform and packer.

If you want to implement tasks around an executable that executes scripts then you need AbstractScriptExecSpec.

Configuring an executable

All tasks (and execution specifications) are configured the same way. Let’s assume that you have something called gitExec with which you want to run git commands.

Your Groovy-DSL will look something like this.

gitExec {
    entrypoint { (1)
        executable = 'git'
        addEnvironmentProvider(project.provider { -> [GIT_COMMITTER_EMAIL: 'ysb33r@domain.example'] }) (2)
    }
    runnerSpec { (3)
        args 'log', '--oneline'
    }
    process { (4)
    }
}

| 1 | Configures anything that can be set in ProcessForkOptions, as well as addEnvironmentProvider. |

| 2 | addEnvironmentProvider allows external providers to add additional environment variables into the execution environment.

These are added after the normal environment settings have been added. |

| 3 | Configures everything related to arguments. |

| 4 | Configures what is needed during and after execution of the process. It is essentially the same as what can be configured in BaseExecSpec. |
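Callout 2 states that provider-supplied variables are added after the normal environment settings. A small Java sketch of that ordering follows. EnvMerge is a hypothetical name, and the sketch assumes that a later value replaces an earlier one with the same name, which the text does not state explicitly.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

// Sketch: merge the normal environment with lazily evaluated environment providers.
// Providers are applied last, so under this sketch's assumption they win on name clashes.
class EnvMerge {
    static Map<String, String> merge(Map<String, String> base,
                                     List<Supplier<Map<String, String>>> providers) {
        Map<String, String> env = new LinkedHashMap<>(base);
        for (Supplier<Map<String, String>> provider : providers) {
            // Each provider is only evaluated here, i.e. when the process is about to run.
            env.putAll(provider.get());
        }
        return env;
    }
}
```

Deferring provider evaluation until the merge is what lets values come from sources that are only known at execution time.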

Configuring a command-based executable

The command and its parameters are configured via an additional cmd block.

For instance you might have implemented Git as a command-based executable.

You can then configure it as follows.

gitExec {
    entrypoint {
        executable = 'git'
        addEnvironmentProvider(project.provider { -> [GIT_COMMITTER_EMAIL: 'ysb33r@domain.example'] })
    }
    runnerSpec {
        args '-C', '/my/project'
    }
    cmd {
        command = 'log' (1)
        args '--oneline' (2)
    }
}

| 1 | Configures the command. |

| 2 | Configures arguments related to the command. |

The above example will result in a command line of git -C /my/project log --oneline.
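The order in which the blocks contribute to that command line can be sketched in a few lines of Java. CommandLineSketch is a hypothetical name used only for illustration; the real assembly happens inside the execution specification classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch of how a command-based execution specification combines its blocks:
// executable, runnerSpec arguments, the command, then the command's own arguments.
class CommandLineSketch {
    static List<String> build(String executable, List<String> runnerArgs,
                              String command, List<String> commandArgs) {
        List<String> line = new ArrayList<>();
        line.add(executable);      // entrypoint { executable = ... }
        line.addAll(runnerArgs);   // runnerSpec { args ... }
        line.add(command);         // cmd { command = ... }
        line.addAll(commandArgs);  // cmd { args ... }
        return line;
    }

    public static void main(String[] args) {
        List<String> line = build("git", Arrays.asList("-C", "/my/project"),
                "log", Arrays.asList("--oneline"));
        System.out.println(String.join(" ", line)); // prints: git -C /my/project log --oneline
    }
}
```

Keeping the global arguments (runnerSpec) separate from the command arguments (cmd) is what allows options such as -C to precede the command.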

Configuring a script-based executable

In a similar fashion it is possible to wrap scripts. For instance, you might be wrapping CRuby. You can then configure it as follows.

cruby {
    entrypoint {
        executable = 'ruby'
    }
    script { (1)
        name = 'rb.env' (2)
        path = '/path/to/rb.env' (3)
    }
}

| 1 | Everything script-related is configured in the script block. |

| 2 | Use name to simply provide the script name.

In this case the implementation that you provide must resolve the script in a suitable fashion.

For instance, a Ruby implementation might add -S to the command line in this case. |

| 3 | Provide the path to the script. In this case the path must exist, and it will be passed as-is or resolved to a canonical location. |
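The two ways of identifying a script could translate into command-line arguments roughly as follows. This Java sketch is hypothetical (ScriptArgsSketch is not part of Grolifant). For a bare name it uses Ruby's -S switch, which makes Ruby search PATH for the script; a path is passed through unchanged. Letting a name win when both are set is an assumption of the sketch.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: turning a script name or path into Ruby command-line arguments.
class ScriptArgsSketch {
    static List<String> scriptArgs(String name, String path) {
        List<String> args = new ArrayList<>();
        if (name != null) {
            args.add("-S");   // let ruby locate the named script via PATH
            args.add(name);
        } else if (path != null) {
            args.add(path);   // an explicit path is used as-is
        } else {
            throw new IllegalArgumentException("Either a script name or a path is required");
        }
        return args;
    }
}
```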

Wrapping a tool with AbstractExecWrapperTask

The AbstractExecWrapperTask is a simplified way of abstracting tools into gradlesque tasks. Unlike the other execution task types mentioned above, it does not expose the full command-line options to the build script author, but rather allows a plugin author to provide suitable functional abstractions. For instance, the Terraform plugin provides a hierarchy of tasks that wrap around the Terraform executable, deal with initialisation and simplify integration of a very popular tool into a build pipeline in a very gradlesque way.

This abstract task also relies on the creation of a suitable extension derived from AbstractToolExtension. The result is a very flexible DSL. This can be illustrated by the following example, which is also a good starting point for any plugin author wanting to abstract a tool in such a way.

Step 1 - Create an execution specification

The details for creating execution specifications have been described earlier. You can use the one which is best suited to your application.

In this example we are using AbstractCommandExecSpec as the base execution specification.

@CompileStatic
class MyCmdExecSpec extends AbstractCommandExecSpec<MyCmdExecSpec> {
    MyCmdExecSpec(ProjectOperations po) {
        super(po)
    }
}

Step 2 - Create the task

@CompileStatic
class MySimpleWrapperTask extends AbstractExecWrapperTask<MyCmdExecSpec> { (1)

    MySimpleWrapperTask() {
        super()
        this.execSpec = new MyCmdExecSpec(projectOperations)
    }

    @Override
    protected MyCmdExecSpec getExecSpec() { (2)
        this.execSpec
    }

    @Override
    protected Provider<File> getExecutableLocation() { (3)
        // Return a provider to the location of the executable here.
    }

    private final MyCmdExecSpec execSpec
}

| 1 | Extend AbstractExecWrapperTask. |

| 2 | Implement a method that will return an instance of your execution specification - in this example, MyCmdExecSpec. |

| 3 | Implement a method that will return the location of the executable. It can be a name or a full path. |

Wrapping a tool with AbstractToolExtension & AbstractExecWrapperWithExtensionTask

Step 1 - Create an execution specification

Firstly, create an execution specification as described in the previous section.

Step 2 - Create an extension

We start with an extension class that will only be used as a project extension.

@CompileStatic
class MyToolExtension extends AbstractToolExtension<MyToolExtension> { (1)

    static final String NAME = 'toolConfig'

    MyToolExtension(ProjectOperations po) { (2)
        super(po)
    }

    MyToolExtension(Task task, ProjectOperations po, MyToolExtension projectExt) {
        super(task, po, projectExt) (3)
    }

    @Override
    protected String runExecutableAndReturnVersion() throws ConfigurationException { (4)
        try {
            projectOperations.execTools.parseVersionFromOutput( (5)
                ['--version'], (6)
                executablePathOrNull(), (7)
                { String output -> '1.0.0' } (8)
            )
        } catch (RuntimeException e) {
            throw new ConfigurationException('Cannot determine version', e)
        }
    }

    @Override
    protected ExecutableDownloader getDownloader() { (9)
    }
}

| 1 | Derive from AbstractToolExtension.

This will provide methods for setting the executable. |

| 2 | Create a constructor for attaching the extension to a project. |

| 3 | You will also need a constructor for attaching to a task. In this case you will also need to pass a reference to the project extension. By convention, always give the task extension and the project extension the same name. For simplicity, we’ll ignore this constructor and return to it a bit later. |

| 4 | When a user specifies a tool by path or search path, we need a different way of obtaining the version. Implement this method to perform that task. |

| 5 | Using parseVersionFromOutput is the easiest way to implement this. |

| 6 | Pass the appropriate parameters that will return output that declares the version. |

| 7 | executablePathOrNull is the easiest way to obtain the path to the executable that was set earlier. |

| 8 | Provide a parser to extract the version from the output. The parser is supplied the output from running the executable with the given command-line parameters. |

| 9 | Returns an instance that will return the location of an executable, possibly downloading it if it is not available locally. See for more details on implementing your own. |
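The parser in callout 8 simply returns a fixed '1.0.0'. A real parser usually pulls the first dotted number out of the tool's output. The Java sketch below shows one way to do that with a regular expression. VersionParser is a hypothetical name, and the sample outputs used in the usage note are assumptions, since tools format their --version output differently.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch of a version parser of the kind handed to parseVersionFromOutput.
// It returns the first run of digits separated by dots, e.g. 1.9.4 or 2.43.0.
class VersionParser {
    private static final Pattern VERSION = Pattern.compile("(\\d+(?:\\.\\d+)+)");

    static String parse(String output) {
        Matcher m = VERSION.matcher(output);
        if (!m.find()) {
            // Surfacing a clear error makes misconfigured executables easier to diagnose.
            throw new IllegalStateException("No version found in: " + output);
        }
        return m.group(1);
    }
}
```

For example, VersionParser.parse("Packer v1.9.4") returns "1.9.4", and VersionParser.parse("git version 2.43.0") returns "2.43.0".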

Step 3 - Create the task class

@CompileStatic
class MyExtensionWrapperTask extends AbstractExecWrapperWithExtensionTask<MyToolExtension, MyCmdExecSpec> { (1)

    MyExtensionWrapperTask() {
        super()
        this.execSpec = new MyCmdExecSpec(projectOperations) (2)
        this.toolExtension = project.extensions.getByType(MyToolExtension) (3)
    }

    @Override
    protected MyCmdExecSpec getExecSpec() { (4)
        this.execSpec
    }

    @Override
    protected MyToolExtension getToolExtension() { (5)
        this.toolExtension
    }

    private final MyCmdExecSpec execSpec
    private final MyToolExtension toolExtension
}

| 1 | Your task class must extend AbstractExecWrapperWithExtensionTask and specify the type of the associated execution specification as well as the type of the extension. |

| 2 | Create an execution specification that is associated with the task. |

| 3 | If the project only has one global project extension and no task extensions, the easiest approach is to look it up and cache the reference. |

| 4 | In most cases, the implementation of getExecSpec simply returns the instance that was created in the constructor. |

| 5 | Return the cached extension. |

Step 4 - Apply this via a plugin

Create both the extension and the task in the plugin.

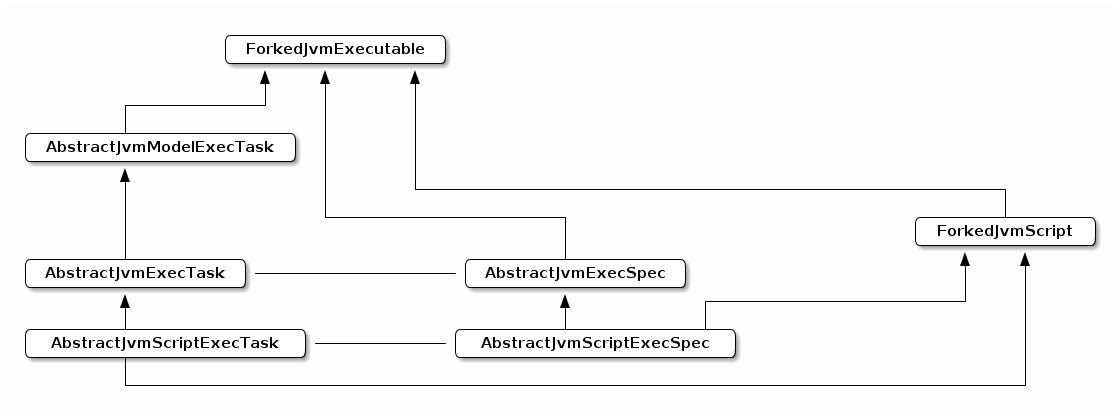

Forked JVM-based Execution

It is possible to simplify the execution of applications on forked JVMs. Typical cases are running specific JVM-based tools or scripts of a JVM-based scripting language such as JRuby.

Execution specifications

Execution specifications are used for configuring the necessary details for running an external process.

- AbstractJvmExecSpec is the core class to extend in order to implement JVM-based execution specifications.
- AbstractJvmExecTask is the core class to extend in order to implement JVM-based execution tasks.
- AbstractJvmScriptExecSpec is the core class to extend in order to implement JVM-based script specifications.
- AbstractJvmScriptExecTask is the core class to extend in order to implement JVM-based script tasks.

Configuration

Assuming that you have a task called myJvmTask whose type extends AbstractJvmExecTask, you can configure all of the relevant settings as follows:

myJvmTask {
    jvm { (1)
    }
    runnerSpec { (2)
    }
    entrypoint { (3)
    }
    process { (4)
    }
}

| 1 | Configures all of the JVM fork options.

It is of type JavaForkOptionsWithEnvProvider and is similar to JavaForkOptions, but with the addition of environment variable providers. |

| 2 | Configures the command-line arguments for executing the class.

These are not the JVM arguments, which need to be configured in jvm instead.

It is of type CmdlineArgumentSpec and similar in nature to the args kind of methods in an ExecSpec. |

| 3 | Configures the main class and the classpath for the execution.

It is of type JvmEntryPoint and has methods similar to the mainClass and classpath methods in JavaExecSpec. |

| 4 | Configures the actual execution of the process, such as setting the streams.

It is of type ProcessExecutionSpec and is similar to BaseExecSpec, but it does not have getCommandLine & setCommandLine methods. |

In addition to the above, a script task includes a script configuration block:

myJvmTask {
    script { (1)
        name = 'install' (2)
        path = '/path/to/script' (3)
    }
}

| 1 | Configures the script name or path as well as any arguments. It is of type JvmScript. |

| 2 | It is possible to just specify the name of the script. In this case it is up to the specific implementation to resolve its location. |

| 3 | It is also possible to specify a path instead of a script name. If both a name and a path are specified, the name takes preference. |

Miscellaneous

Upgrading from 3.x

3.x completely drops the grolifant40-legacy-api artifact and it no longer includes the grolifant40 artifacts by default.

If you still need Gradle 4.3-4.10 compatibility, you should manually add the grolifant40 artifact to the runtime classpath.

Upgrading from 2.x

If you are still using any of the deprecated APIs from org.ysb33r.grolifant.api.v4, you should change the code to use the new APIs in org.ysb33r.grolifant.api.core.

If this is not possible, you can add the appropriate legacy dependency.

dependencies {
    api 'org.ysb33r.gradle:grolifant40-legacy-api:4.0.0'
}

Upgrading from 1.x

Upgrading from old Downloaders

If you have v4.downloader |

Use core.downloader class |

Notes |

- |

||

- |

||

- |

||

- |

Upgrading old Execution Tasks & Specifications

If you have v4.exec or v4.runnable class |

Use core.runnable class |

Notes |

|

Instead of passing a Project, only pass a `ProjectOperations` instance to the protected constructor. If you passed a Task and extension before, then you need to pass a Task, `ProjectOperations` and reference to the project extension of the same type. You may pass |

|

Instead of passing a Project and an Object of the executable, only pass a `ProjectOperations` instance to the protected constructor. |

||

- |

||

The new implementation does not require a version in the constructor. |

||

- |

||

- |

||

Instead of passing a Project and an Object of the executable, only pass an instance of `ProjectOperations` to the protected constructor. |

||

|

|

- |

Instead of passing a Project and an Object of the executable, only pass a `ProjectOperations` instance to the protected constructor. |

||

|

If you passed a Project before, now pass an instance of `ProjectOperations` to the protected constructor. If you passed a Task and extension before, then you need to pass a Task, `ProjectOperations` and reference to the project extension of the same type. You may pass |

|

- |

||

- |

This is no longer required if |

|

- |

If you have a method executable(Map<String, Object> opts), then you can mark it as deprecated and implement its routing as follows:

@Deprecated
void executable(Map<String, Object> opts) {
    if (opts.containsKey('version')) {
        log.warn("'${this.class.name}#executable version' is deprecated. Use executableByVersion()")
        executableByVersion(opts['version'])
    } else if (opts.containsKey('path')) {
        log.warn("'${this.class.name}#executable path' is deprecated. Use executableByPath()")
        executableByPath(opts['path'])
    } else if (opts.containsKey('search')) {
        log.warn("'${this.class.name}#executable searchPath()' is deprecated. Use executableBySearchPath()")
        executableBySearchPath('your-exec-name') (1)
    }
}

| 1 | Replace with the basename of the executable. |

Upgrading Git downloaders

If you have classes in v4.git package |

Use core.git class |

Notes |

Pass a |

||

- |

||

- |

||

Pass a |

||

Pass a |

||

- |

Upgrading PropertyResolver

If you have classes in v4 package |

Use core.resolvers class |

Notes |

- |

Upgrading Wrapper Tasks

If you have classes in v4.wrapper.script package |

Use core.wrappers class |

Notes |

You will need to switch to |

||

- |

Configuration Cache Safety

Because the Gradle configuration cache makes it impossible to use the Project instance after the configuration phase has ended, plugin authors can no longer rely on calling methods on this class.

The only exceptions are within constructors of tasks and extensions.

Plugin authors should take extra care not to call the Task.getProject() method anywhere except in the constructor.

To help with compatibility across Gradle versions that have configuration caching and those that don’t, ProjectOperations has been introduced. It can be used either as an extension or as a standalone instance.

import org.ysb33r.grolifant.api.GrolifantExtension (1)

ProjectOperations grolifant = GrolifantExtension.maybeCreateExtension(project) (2)

| 1 | Required import |

| 2 | Call this from the plugin’s apply method and pass the project instance. It will create a project extension which is called grolifant.

This method can be called safely from many plugins, as the extension will only be created once. |

ProjectOperations grolifant = ProjectOperations.create(project) (1)

| 1 | Creates an instance of ProjectOperations, but does not attach it to the project.

The instance will be aware of the project, but caches a number of references to extensions which would traditionally be obtained via the Project instance.

The specific instance will depend on which grolifant JARs are on the classpath and which Gradle version is actually running. |

ProjectOperations grolifant = ProjectOperations.find(project) (1)

| 1 | Shortcut for finding the project extension. Will throw an exception if the extension was not attached to the project. |

Replacing existing Project methods

The following methods can be called like-for-like on the grolifant extension:

- copy
- exec
- file
- getBuildDir
- javaexec
- provider
- tarTree
- zipTree

In addition, the following helper methods are also provided:

- buildDirDescendant - Returns a provider to a path below the build directory.
- fileOrNull - Similar to file, but returns null rather than throwing an exception when the object is null or an empty provider.
- projectCacheDir - Returns the project’s cache directory.
- updateFileProperty - Updates a file property on Gradle 4.3+, allowing behaviour to be the same as that of the new methods introduced in Gradle 5.0. It is, however, more powerful than the standard .set() on a Property.

Provider Tools

To help where certain provider functionality is not available in earlier versions of Gradle, providerTools can be utilised.

The following method is available:

- flatMap - FlatMaps one provider to another using a transformer. This allows Gradle 4.3 - 4.10.3 to have the same functionality as Gradle 5.0+.
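The difference between map and flatMap is easiest to see with a tiny lazy container. The Java class below is a hypothetical stand-in, not Gradle's Provider API: map turns a value into another value, whereas flatMap turns a value into another provider and unwraps it, and nothing is evaluated until get() is called.

```java
import java.util.function.Function;
import java.util.function.Supplier;

// A minimal, hypothetical lazy "provider" used only to illustrate map versus flatMap.
final class Lazy<T> {
    private final Supplier<T> supplier;

    private Lazy(Supplier<T> supplier) {
        this.supplier = supplier;
    }

    static <T> Lazy<T> of(Supplier<T> supplier) {
        return new Lazy<>(supplier);
    }

    // The value is computed on demand, every time get() is called.
    T get() {
        return supplier.get();
    }

    // map: transform the value into a plain value.
    <R> Lazy<R> map(Function<? super T, ? extends R> transformer) {
        return new Lazy<R>(() -> transformer.apply(get()));
    }

    // flatMap: transform the value into another provider and unwrap it,
    // so the result is Lazy<R> rather than Lazy<Lazy<R>>.
    <R> Lazy<R> flatMap(Function<? super T, Lazy<R>> transformer) {
        return new Lazy<R>(() -> transformer.apply(get()).get());
    }
}
```

For example, Lazy.of(() -> "packer").flatMap(n -> Lazy.of(() -> n.length())).get() yields 6, and the supplier only runs at that final get().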

Lazy-evaluated project version

The project.version has evolved over time and will still evolve in future Gradle releases.

In its current state (at least until Gradle 8.x), it still is not a lazy property.

It also has the quirk that if it is assigned an object, that object is evaluated using toString.

In order to deal with this, Grolifant offers getVersionProvider and setVersionProvider.

getVersionProvider returns the value of project.version within a provider.

This allows tasks to push evaluation of the version as late as possible.

This might get tricky when configuration cache is involved as the project object might not be available.

Grolifant leaves that decision in the hands of the build script author.

However, it is possible for a build script author to apply a lazy-evaluated provider.

This is done via setVersionProvider, which will then also set project.version to use this provider.

In this way most version-evaluating scenarios are safely resolved.
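The quirk that project.version evaluates an assigned object with toString is what makes a lazy version possible: an object whose toString delegates to a provider is only evaluated when the version is read. A Java sketch of that technique follows. LazyVersion is a hypothetical class, and whether setVersionProvider is implemented exactly this way is an assumption.

```java
import java.util.function.Supplier;

// Sketch: an object that defers version evaluation until toString() is called.
// Assigning such an object as a version means the value is computed on each read.
final class LazyVersion {
    private final Supplier<String> provider;

    LazyVersion(Supplier<String> provider) {
        this.provider = provider;
    }

    @Override
    public String toString() {
        return provider.get(); // evaluated when read, not when assigned
    }
}
```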

Operating System

Many plugin developers are familiar with the OperatingSystem internal API in Gradle. Unfortunately this remains an internal API and is subject to change.

Grolifant offers a similar public API with a small number of API differences:

- No getFamilyName and getNativePrefix methods. (A scan of the Gradle 3.2.1 codebase does not seem to yield any usage of either.)
- No public static fields called WINDOWS, MACOSX etc. These are now a static field called INSTANCE on each of the specific operating system implementations.
- getSharedLibrarySuffix and getSharedLibraryName have been added.
- Support for NetBSD.

Example

OperatingSystem os = OperatingSystem.current() (1)

File findExe = os.findInPath('bash')

| 1 | Use current() to obtain the operating system the code is being executed upon. |
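A PATH lookup such as findInPath walks the directories listed in PATH and returns the first matching executable. Here is a rough Java sketch of that idea. It is not Grolifant's implementation: PathSearch is a hypothetical name, the PATH string is passed in explicitly to keep it testable, and on Windows it only tries an extra .exe suffix rather than honouring PATHEXT.

```java
import java.io.File;
import java.util.Locale;

// Rough sketch of a PATH search; returns null when nothing is found.
class PathSearch {
    static File findInPath(String name, String pathEnv) {
        if (pathEnv == null) {
            return null;
        }
        boolean windows = System.getProperty("os.name", "")
                .toLowerCase(Locale.ROOT).contains("windows");
        for (String dir : pathEnv.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue; // skip empty PATH entries
            }
            File candidate = new File(dir, name);
            if (candidate.isFile() && candidate.canExecute()) {
                return candidate;
            }
            if (windows) {
                File exe = new File(dir, name + ".exe");
                if (exe.isFile()) {
                    return exe;
                }
            }
        }
        return null;
    }
}
```

Usage: File bash = PathSearch.findInPath("bash", System.getenv("PATH")); yields null if bash is not on the PATH.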

Operating system detection

The logic in 4.0.0 to determine an operating system is:

static OperatingSystem current() {
    if (OS_NAME.contains('windows')) {
        return Windows.INSTANCE
    } else if (OS_NAME.contains('mac os x') || OS_NAME.contains('darwin') || OS_NAME.contains('osx')) {
        return MacOsX.INSTANCE
    } else if (OS_NAME.contains('linux')) {
        return Linux.INSTANCE
    } else if (OS_NAME.contains('freebsd')) {
        return FreeBSD.INSTANCE
    } else if (OS_NAME.contains('sunos') || OS_NAME.contains('solaris')) {
        return Solaris.INSTANCE
    } else if (OS_NAME.contains('netbsd')) {
        return NetBSD.INSTANCE
    }
    // Not strictly true, but a good guess
    GenericUnix.INSTANCE
}

Contributing fixes

Found a bug or need a method? Please raise an issue and preferably provide a pull request with features implemented for all supported operating systems.

Git Cloud Provider Archives

In a slightly similar fashion to Distribution Installer it is possible to download archives of GitHub & GitLab repositories.

For this there is GitHubArchive & GitLabArchive.

Both implement GitCloudDescriptor.

This is then complemented by GitRepoArchiveDownloader.

The latter does the actual downloading work.

It exposes the root directory of the unpacked archive using the getArchiveRoot method.

You can combine these classes in a similar fashion to where Distribution Installer is used.

A primary use case might be the download of resources from a cloud Git provider. This includes templates for Reveal.js, styling for Asciidoctor-pdf, extensions for Antora and many more.
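GitHub and GitLab both serve repository snapshots from public archive URLs. The Java sketch below shows the URL shapes used by github.com and gitlab.com for a given reference. GitArchiveUrls is a hypothetical helper, and whether GitHubArchive and GitLabArchive use exactly these endpoints is an assumption. References containing slashes (such as some branch names) are not handled.

```java
// Hypothetical helper building public archive download URLs for a repository snapshot.
class GitArchiveUrls {
    // github.com serves https://github.com/OWNER/REPO/archive/REF.zip
    static String gitHub(String owner, String repo, String ref) {
        return "https://github.com/" + owner + "/" + repo + "/archive/" + ref + ".zip";
    }

    // gitlab.com serves https://gitlab.com/NAMESPACE/PROJECT/-/archive/REF/PROJECT-REF.zip
    static String gitLab(String namespace, String project, String ref) {
        return "https://gitlab.com/" + namespace + "/" + project
                + "/-/archive/" + ref + "/" + project + "-" + ref + ".zip";
    }
}
```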

Simplified Property Resolving

Taking an idea from Spring Boot configuration, this class provides out-of-the-box functionality to resolve a property by looking at the Gradle project properties, Java system properties and the environment.

resolver.get('a.b.c') (1)

resolver.get('a.b.c','123') (2)

resolver.get('a.b.c', resolver.SYSTEM_ENV_PROPERTY) (3)

resolver.get('a.b.c', '123', resolver.SYSTEM_ENV_PROPERTY) (4)

resolver.provide('a.b.c') (5)

resolver.provide('a.b.c','123') (6)

resolver.provideAtConfiguration('a.b.c') (7)

resolver.provideAtConfiguration('a.b.c','123') (8)

resolver.provide('a.b.c', '123', resolver.SYSTEM_ENV_PROPERTY, false) (9)

resolver.provide('a.b.c', '123', resolver.SYSTEM_ENV_PROPERTY, true) (10)

| 1 | Search for property a.b.c in the order of project property & system property, and then for the environment variable A_B_C. |

| 2 | Search for the property, but return a default value if none was found. |

| 3 | Use a different search order. Anything that implements PropertyResolveOrder can be specified. |

| 4 | Combine a default value with a different search order. |

| 5 | A provider of a property. |

| 6 | A provider of a property with a default value. |

| 7 | A provider of a property that can be used at configuration time. |

| 8 | A provider of a property with a default value that can be used at configuration time. |

| 9 | A provider of a property with a default value, and a different order, which cannot be used at configuration time. |

| 10 | A provider of a property with a default value, and a different order, which can be used at configuration time. |
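The default search order from callout 1 can be sketched in plain Java, with maps standing in for the Gradle project properties, the Java system properties and the environment. PropertyLookup is a hypothetical name; Grolifant's resolver supports other orders through PropertyResolveOrder, and the sketch only derives the environment variable name by replacing dots with underscores and upper-casing, as in the a.b.c to A_B_C example.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Sketch of the default lookup order: project property, system property, environment.
class PropertyLookup {
    // a.b.c -> A_B_C
    static String envName(String property) {
        return property.replace('.', '_').toUpperCase(Locale.ROOT);
    }

    static Optional<String> resolve(String name, Map<String, String> projectProps,
                                    Map<String, String> systemProps, Map<String, String> env) {
        if (projectProps.containsKey(name)) {
            return Optional.ofNullable(projectProps.get(name));
        }
        if (systemProps.containsKey(name)) {
            return Optional.ofNullable(systemProps.get(name));
        }
        return Optional.ofNullable(env.get(envName(name)));
    }

    // Variant with a default value, mirroring resolver.get('a.b.c', '123').
    static String resolve(String name, String defaultValue, Map<String, String> projectProps,
                          Map<String, String> systemProps, Map<String, String> env) {
        return resolve(name, projectProps, systemProps, env).orElse(defaultValue);
    }
}
```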

What’s in a name

Grolifant is a concatenation of Gr for Gradle and olifant, which is the Afrikaans word for elephant. The latter is of course the main part of the current Gradle logo.

Who uses Grolifant?

The following plugins are known consumers of Grolifant:

If you would like to register your plugin as a Grolifant user, please raise an issue (and preferably a merge request).